I want to describe a secure way to pass a string-parameter containing a comma-separated list of column names to include in a result set. This can be useful when you’re often querying the same tables with the same predicates, but wish to include different columns in the result set for different purposes.

To setup our example, we’re going to use two tables in the AdventureWorks2022 database and the two tables Sales.SalesOrderHeader and Sales.SalesOrderDetail.

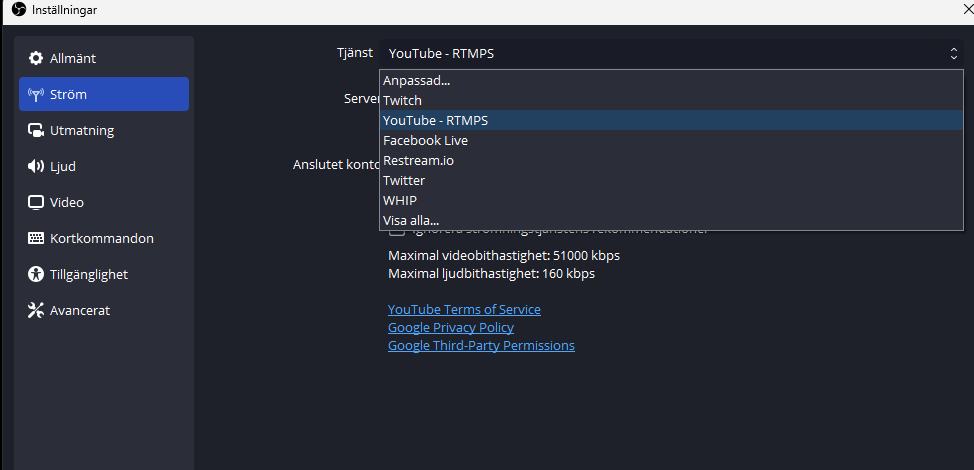

Our first version of the Stored Procedure has a fixed column list, so no dynamic columns in the result set.

As you can see, we now have a stored procedure that returns some information about orders and line details.

Moving on, we now want to change this stored procedure, so that it can return a more dynamic column list. Perhaps some parts of an application isn’t interested in Totals at all, and other parts of an application isn’t interested in DueDate or ShipDate at all, but the query apart from that more or less the same.

Let’s start with an example of how NOT to do it. First the code, and then I will show why the below is really bad idea.

Although this works, sort of it opens up our database for SQL injection.

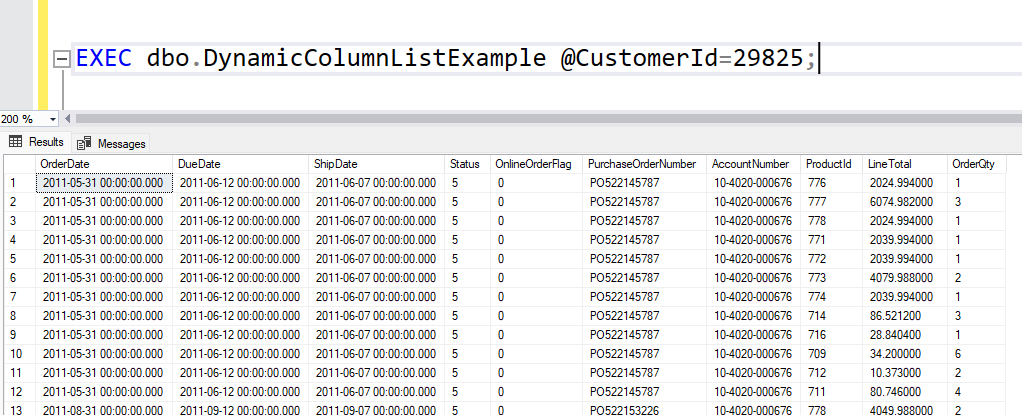

We can do this:

EXEC dbo.DynamicColumnListExample @CustomerId=29825, @ColumnList=N'OrderDate, ShipDate, OnlineOrderFlag';

And

it will indeed give us the desired result set, with three columns.

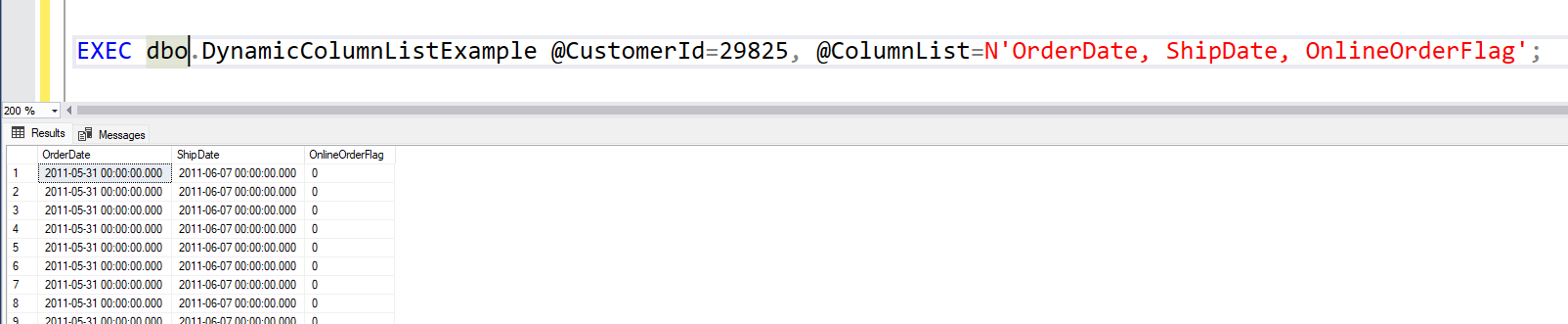

But we can also do this.

EXEC dbo.DynamicColumnListExample @CustomerId=29825, @ColumnList=N'OrderDate, ShipDate, OnlineOrderFlag from (values('''','''',''''))t(orderdate,shipdate,onlineorderflag);--

select * from sys.tables;

select * '

Wait, what???!!!

This will generate the following SQL-statements and the Stored Procedure will happily execute them with sp_executesql.

SELECT OrderDate, ShipDate, OnlineOrderFlag from (values('','',''))t(orderdate,shipdate,onlineorderflag);--

select * from sys.tables;

select *

FROM Sales.SalesOrderHeader AS SOH

INNER JOIN Sales.SalesOrderDetail AS SOD

ON SOD.SalesOrderID = SOH.SalesOrderID

WHERE SOH.CustomerID = @CustomerId;

So first a result set with three empty strings.

Then all the rows from sys.tables.

And finally all the columns from Sales.SalesOrderHeader and Sales.SalesOrderDetail for a given customer. Not at all what we were looking for, right?

But what if we still want to allow for dynamic column lists? We DO want to use dynamic SQL. But we can’t really do it this way. The way to secure this is to use the STRING_SPLIT function to convert the ColumnList parameter to a data set, and then convert it back to a comma separated list with STRING_AGG, but apply the QUOTENAME function to each element. Like this:

DECLARE @columnListSecure NVARCHAR(MAX); SELECT @columNListSecure = STRING_AGG(QUOTENAME(TRIM(BOTH ' ' FROM value)),',') FROM STRING_SPLIT(@ColumnList,',') AS SS

And then we use columnListSecure instead of columnList to build our column list in the dynamic SQL-statement.

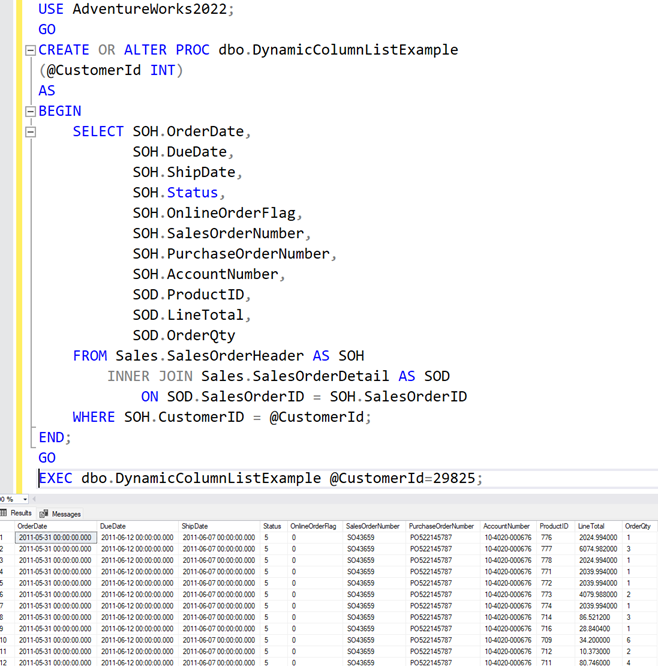

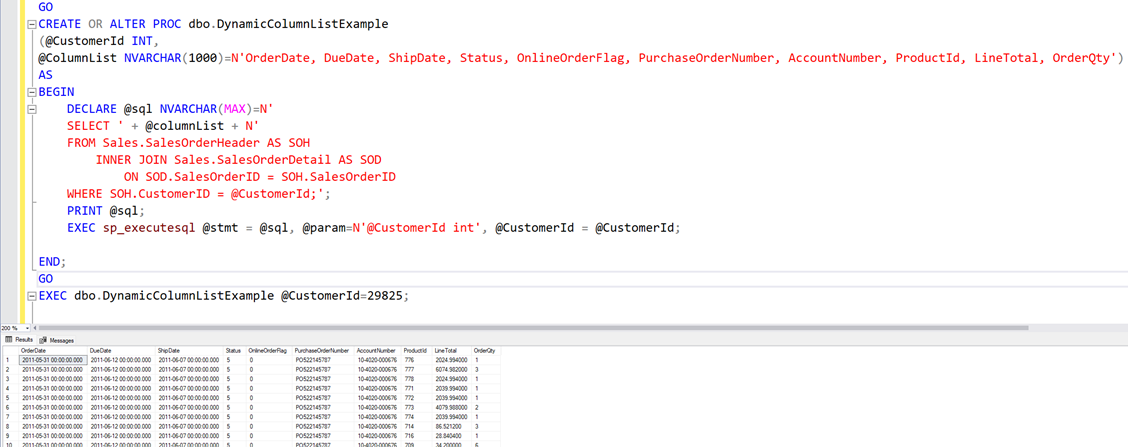

Our final procedure looks like this:

CREATE OR ALTER PROC dbo.DynamicColumnListExample

(

@CustomerId INT,

@ColumnList NVARCHAR(1000) = N'OrderDate, DueDate, ShipDate, Status, OnlineOrderFlag, PurchaseOrderNumber, AccountNumber, ProductId, LineTotal, OrderQty'

)

AS

BEGIN

DECLARE @columnListSecure NVARCHAR(MAX);

SELECT @columnListSecure = STRING_AGG(QUOTENAME(TRIM(BOTH ' ' FROM value)), ',')

FROM STRING_SPLIT(@ColumnList, ',') AS SS;

DECLARE @sql NVARCHAR(MAX)

= N'

SELECT ' + @columnListSecure

+ N'

FROM Sales.SalesOrderHeader AS SOH

INNER JOIN Sales.SalesOrderDetail AS SOD

ON SOD.SalesOrderID = SOH.SalesOrderID

WHERE SOH.CustomerID = @CustomerId;';

PRINT @sql;

EXEC sp_executesql @stmt = @sql,

@param = N'@CustomerId int',

@CustomerId = @CustomerId;

END;

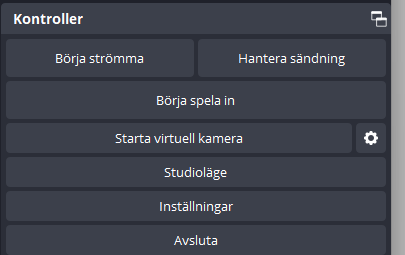

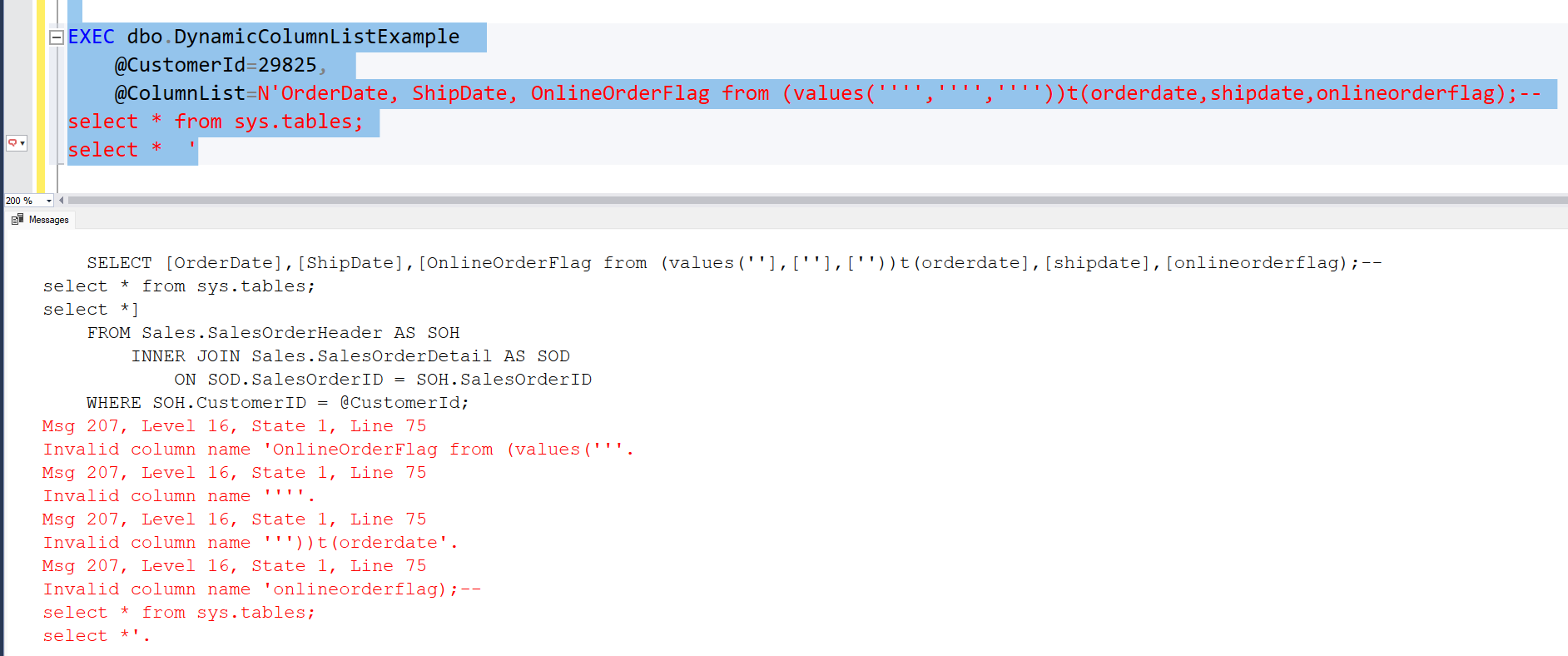

Let’s test it. First without a column list. Then using a column list with good intentions. And finally a column list with bad intentions.

As you can see from the printed SQL-statement, all the column identifiers are quoted and the error messages we get are that there are no columns named “OnlineOrderFlag from (values(”” etc. Meaning all the malicious code that was sent into the column list is translated to quoted identifiers which when used as part of our column list gives error messages. So we stopped an attacker from using a dynamic SQL injection vulnerability.

Read more about SQL Injection and other vulnerabilities on OWASP Top Ten: OWASP Top Ten | OWASP Foundation